NIST AI RMF vs ISO/IEC 42001 Crosswalk

Welcome back to Responsible AI Review, your weekly signal on AI governance, safety, and sustainability in agentic systems and beyond.

Curated by Alexandra Car, Chief AI & Sustainability Officer at BI Group.

Explore past editions here or join the conversation here.


From Risk Principles to Auditable Practice

Most organisations now realise that AI governance can’t be improvised. Risk registers, codes of conduct, and policy checklists aren’t enough, not when regulators, investors, and auditors are watching. What’s missing is coherence across frameworks.

Two standards now dominate that space: the NIST AI Risk Management Framework (AI RMF) and the ISO/IEC 42001 AI Management System. One encourages structured thinking, the other demands operational rigour. Together, they offer a path from internal maturity to external accountability. But they’re not interchangeable, and too many teams treat them as if they were.

At BI Group, we work with both. We help clients move beyond checkbox compliance, translating abstract principles into measurable controls. This comparison breaks down where NIST and ISO align, where they diverge, and how to use them in combination to build a governance system that can hold under pressure.

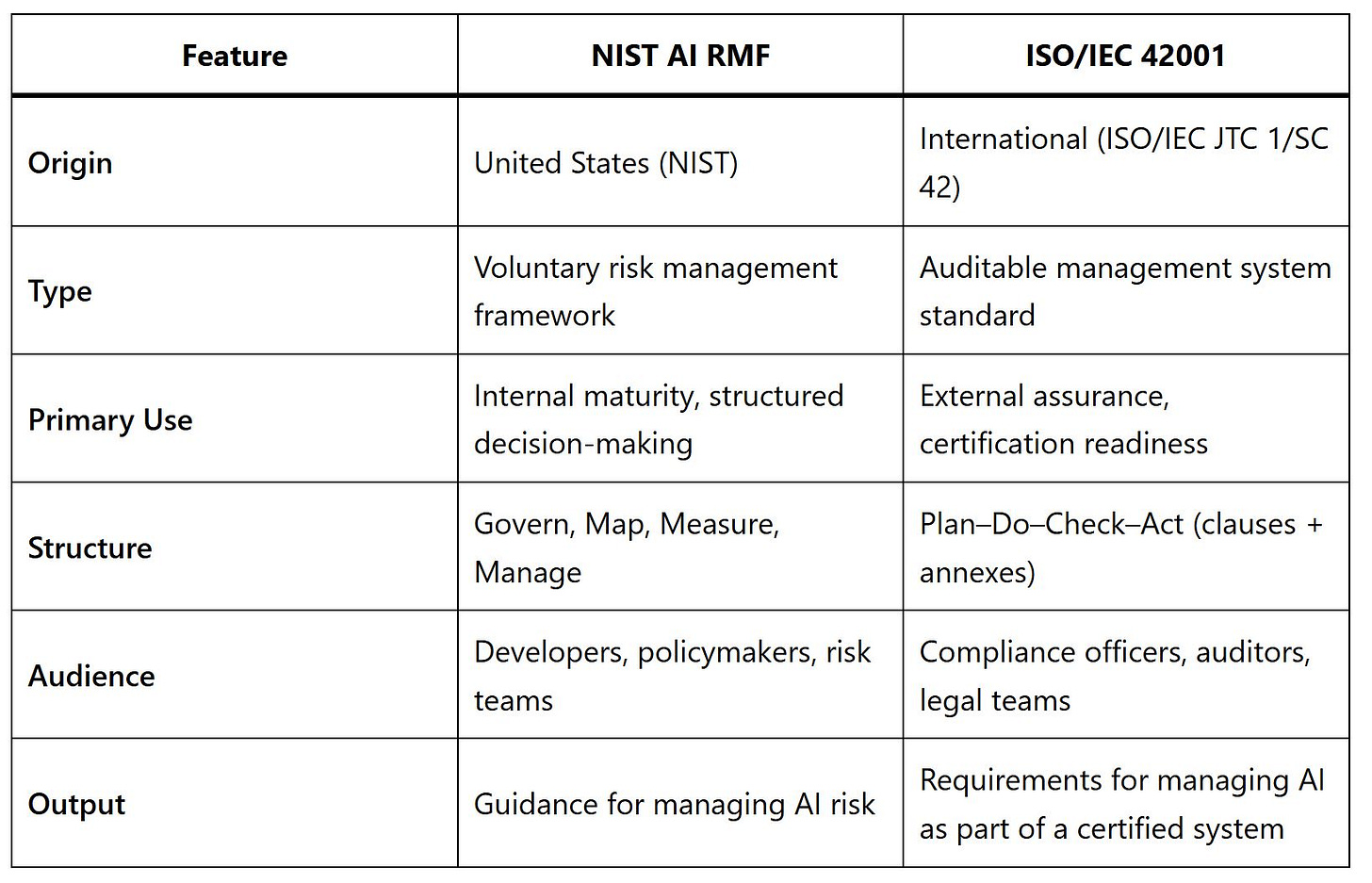

Quick Comparison Snapshot

NIST AI RMF and ISO/IEC 42001 serve different purposes, but they often end up on the same desk. Here’s how they compare at a glance:

Used in isolation, each has gaps. NIST gives room to explore risk, but no formal system for demonstrating control. ISO requires artefacts, accountability, and review cycles, but says less about culture and context. Most teams need both.

Where They Align

Despite their different origins, NIST AI RMF and ISO/IEC 42001 overlap across critical governance areas. In practice, both frameworks ask similar questions, just with different levels of precision and enforcement.

Below are key areas where the alignment is strongest:

1. Roles, Accountability, and Leadership

Both frameworks expect clear roles across the AI lifecycle.

NIST frames this under the “Govern” function: responsibilities, communication lines, and leadership accountability. ISO formalises it in Clauses 5.1 (Leadership), 5.3 (Roles), and Annex B.3.2 (AI-specific functions), with requirements to document, assign, and review those roles regularly.

Teams cannot delegate this downstream. Leadership must own AI-related risks, and show they’ve planned for them.

2. Policy, Objectives, and Intended Use

NIST calls for AI objectives and risk tolerances to be defined. ISO requires the same, but links them directly to documented AI policy (Clause 5.2), risk treatment plans (Clause 6.1), and stakeholder expectations (Clause 4.2).

This alignment is essential. Whether deploying a recommendation engine or a model affecting critical infrastructure, both frameworks ask: What is this system for, and what does ‘acceptable’ look like?

3. Risk Management Across the Lifecycle

The risk treatment cycle (identification, assessment, mitigation, and review) is present in both frameworks. NIST covers this in its “Map” and “Manage” functions. ISO embeds it in Clauses 6.1.2–6.1.4 and 8.2–8.4, along with the requirement to maintain artefacts that demonstrate the process was followed.

4. Post-Deployment Monitoring and Improvement

NIST promotes ongoing measurement and feedback, especially under its “Measure” function. ISO takes this further with internal audits (Clause 9.2), management reviews (9.3), and continual improvement (10.1).

5. Stakeholder Engagement and Impact Assessment

Each framework includes mechanisms to understand and respond to societal, individual, and environmental impacts. NIST flags this in its mapping and measuring steps. ISO addresses it more formally:

Clause B.5.4 (impact on individuals and groups)

Clause B.5.5 (societal impact)

Clause B.8.3 (external reporting)

Both require teams to move beyond internal views and engage users, affected communities, and partners.
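For teams that want to keep these five alignment areas somewhere more queryable than a slide, the pairing can be held as a small lookup table. What follows is a minimal sketch, not an official mapping: the clause references are copied from this article, the ranges 6.1.2–6.1.4 and 8.2–8.4 are expanded by hand, and the names `AlignmentArea`, `ALIGNMENT`, and `iso_refs_for` are illustrative assumptions. Where the article names no specific NIST function (area 2), the field is left empty rather than guessed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlignmentArea:
    name: str
    nist_functions: tuple[str, ...]  # NIST AI RMF functions named in this article
    iso_refs: tuple[str, ...]        # ISO/IEC 42001 references named in this article


# Hypothetical encoding of the five areas above; not an authoritative crosswalk.
ALIGNMENT = (
    AlignmentArea("Roles, accountability, and leadership",
                  ("Govern",), ("5.1", "5.3", "B.3.2")),
    AlignmentArea("Policy, objectives, and intended use",
                  (), ("5.2", "6.1", "4.2")),  # article names no NIST function here
    AlignmentArea("Risk management across the lifecycle",
                  ("Map", "Manage"),
                  ("6.1.2", "6.1.3", "6.1.4", "8.2", "8.3", "8.4")),
    AlignmentArea("Post-deployment monitoring and improvement",
                  ("Measure",), ("9.2", "9.3", "10.1")),
    AlignmentArea("Stakeholder engagement and impact assessment",
                  ("Map", "Measure"), ("B.5.4", "B.5.5", "B.8.3")),
)


def iso_refs_for(nist_function: str) -> list[str]:
    """Collect every ISO reference this article pairs with a NIST function."""
    refs: list[str] = []
    for area in ALIGNMENT:
        if nist_function in area.nist_functions:
            refs.extend(area.iso_refs)
    return refs


print(iso_refs_for("Govern"))
```

A table like this is only a starting point. Each pairing still needs someone to confirm that the evidence produced for one side actually satisfies the other.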

Where They Differ

NIST AI RMF and ISO/IEC 42001 were built for different goals. That becomes obvious the moment you try to operationalise them side by side. Knowing where they diverge saves time, avoids duplication, and prevents false assumptions about compliance coverage.

1. Approach: Framework vs System

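NIST offers a conceptual structure for managing AI risks. It’s designed to be adaptable, usable in part, and lightweight, which makes it useful for early-stage AI governance or internal alignment across teams. ISO/IEC 42001, by contrast, defines a management system: a set of requirements the organisation operates as a whole, not a menu to pick from.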

2. Auditability and Certification

NIST is not certifiable. It helps teams ask the right questions and design defensible processes, but there is no external assurance attached to implementation. ISO/IEC 42001 is certifiable, so an independent body can audit the implementation against it.

3. Level of Prescription

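NIST gives principles and functions, leaving room for interpretation. It doesn’t dictate what a risk register must contain or how incident response should be documented. ISO is more prescriptive: it requires documented artefacts, assigned accountability, and scheduled reviews.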

4. Integration with Broader Management Systems

ISO/IEC 42001 was explicitly designed to sit alongside other ISO standards: 27001 (security), 9001 (quality), and 31000 (risk). Clause numbering, terminology, and structure follow ISO conventions. This makes adoption easier for organisations already running integrated management systems.

5. Depth on Societal Impact and Sustainability

Both frameworks touch on societal impacts. But ISO goes further, requiring documentation of environmental impact, fairness, and societal risk assessments. These are reflected in Clauses B.5.5 (societal impact), B.5.4 (individual impact), and B.12 (sustainability-related clauses), aligning with broader ESG and CSRD requirements.

When to Use One or Both

Choosing between NIST AI RMF and ISO/IEC 42001 isn’t just a matter of preference. It’s a question of timing, context, and compliance exposure. In most cases, organisations will need to use both, just not all at once.

Early-Stage or Low-Risk Use Cases

Use NIST AI RMF as your entry point. It’s ideal for organisations building internal AI governance from the ground up. You can use it to map your systems, define roles, document assumptions, and build a risk culture across teams.

NIST works well when you're still shaping your processes, not yet ready for formal certification but needing a shared language to move forward.

High-Risk Systems or Regulated Sectors

If your AI falls under high-risk classification (e.g. health, finance, public services, critical infrastructure), you will need to align with ISO/IEC 42001.

This becomes even more important if you’re operating across Europe, where the EU AI Act expects organisations to have formal risk and quality management systems in place.

ISO isn’t just for show. It’s your evidence base when things go wrong, or when regulators ask for proof.

Multinational or Procurement-Driven Environments

For organisations operating across borders or responding to tenders, both frameworks often appear together. NIST provides a flexible baseline to align internal teams. ISO delivers the documentation and audit trail external stakeholders expect.

Use NIST to model and stress-test your AI risks

Use ISO to formalise, review, and demonstrate how those risks are being managed
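The three scenarios above boil down to a simple triage. The sketch below is a deliberate simplification of this section, assuming just two yes/no questions; `Context` and `priority_frameworks` are hypothetical names, and the result indicates where to put effort first, not which framework to ignore.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    high_risk: bool               # e.g. health, finance, public services, critical infrastructure
    cross_border_or_tender: bool  # multinational operations or procurement-driven work


def priority_frameworks(ctx: Context) -> list[str]:
    """Return the framework(s) to prioritise, following the article's three scenarios."""
    if ctx.cross_border_or_tender:
        # Both tend to appear together: NIST as internal baseline, ISO as audit trail.
        return ["NIST AI RMF", "ISO/IEC 42001"]
    if ctx.high_risk:
        # Formal evidence is needed, so ISO alignment comes first.
        return ["ISO/IEC 42001"]
    # Early-stage or low-risk: start with NIST as the entry point.
    return ["NIST AI RMF"]


print(priority_frameworks(Context(high_risk=True, cross_border_or_tender=False)))
```

Real decisions will weigh more than two flags, but writing the rule down makes the assumptions behind a framework choice visible and open to challenge.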

Bridging Strategy and Execution

Many organisations treat strategy and operations as separate domains. NIST and ISO can serve as a bridge:

NIST drives alignment at the design and intent stage

ISO ensures that alignment is maintained through system performance, stakeholder engagement, and continual improvement

In our view, that’s the most important takeaway:

Use NIST to think. Use ISO to deliver.

BI Group Practitioner Guidance

Many frameworks look good on paper. These two are no exception. But in practice, aligning NIST AI RMF with ISO/IEC 42001 takes more than side-by-side reading.

Start with what’s real. List your current AI systems, their intended uses, and who is responsible for each. Don’t begin with the framework; begin with the system.

Don’t over-engineer NIST. It’s not a compliance tool. Use it to sharpen thinking, structure risk conversations, and identify where visibility is missing.

Use ISO for what it’s built for. If you’re deploying AI at scale, or under regulatory pressure, ISO 42001 will force consistency in roles, records, and reviews.

Translate, don’t duplicate. Avoid building two parallel systems. Where NIST asks for “risk identification,” and ISO asks for “Clause 6.1.2,” link them. One intent, two expressions.

Document as you go. ISO requires artefacts. Don’t wait until an audit request arrives. Build the traceability as a living process, starting with change logs, training records, and stakeholder communications.

Use NIST to test the logic. If a risk treatment plan looks complete under ISO but makes no sense when mapped back to NIST’s functions, stop and fix it. You need both rigour and relevance.
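Several of the points above (start with the system, translate rather than duplicate, document as you go) can live in one record instead of two parallel ones. Here is a minimal sketch of that idea, assuming a simple in-memory inventory. All names (`AISystem`, `RiskEntry`, `gaps`) and the example system are hypothetical, and tagging “risk identification” with the NIST “Map” function is our assumption, since the article pairs it only with Clause 6.1.2.

```python
from dataclasses import dataclass, field


@dataclass
class RiskEntry:
    description: str
    nist_function: str  # assumed tag, e.g. "Map"
    iso_clause: str     # e.g. "6.1.2"
    evidence: list[str] = field(default_factory=list)  # change logs, training records, etc.


@dataclass
class AISystem:
    name: str
    intended_use: str
    owner: str
    risks: list[RiskEntry] = field(default_factory=list)


def gaps(system: AISystem) -> list[str]:
    """Flag a missing owner and any risk entry that has no evidence attached."""
    found: list[str] = []
    if not system.owner.strip():
        found.append(f"{system.name}: no responsible owner recorded")
    for risk in system.risks:
        if not risk.evidence:
            found.append(f"{system.name}: no evidence for '{risk.description}'")
    return found


# Illustrative system: one intent ("risk identification"), two framework expressions.
system = AISystem(
    name="recommendation-engine",
    intended_use="Rank products for logged-in customers",
    owner="",
    risks=[RiskEntry("Risk identification", "Map", "6.1.2")],
)

for gap in gaps(system):
    print(gap)
```

Running a check like this on every change, not just before an audit, is one way to keep traceability a living process.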

We’ve published a complete, section-by-section crosswalk mapping every NIST AI RMF function to its corresponding ISO/IEC 42001 clause. Designed for teams that need to get things done, not just tick boxes, it provides immediate clarity for implementation, compliance, and audit preparation.

What’s Next in the Series

This is the first article in BI Group’s new comparison series. Coming next:

EU AI Act vs ISO/IEC 23894

Risk engineering meets legal compliance. What each demands, and what they don’t.

OECD Principles vs UNESCO AI Ethics

Soft law, voluntary guidance, and how to make them useful inside an organisation.

Model Cards vs Fact Sheets vs Data Sheets

Transparency artefacts compared. Which one delivers accountability, and which one stays in the drawer.

CSRD/ESRS vs AI Governance

Connecting sustainability disclosure with algorithmic impact.

Each will include a downloadable matrix or alignment tool, available via the BI Group website.

Applying This in Practice

Frameworks only help when they connect to action. NIST AI RMF gives you the language to think clearly about AI risk. ISO/IEC 42001 gives you the structure to prove you’re managing it. Most teams need both, but very few know how to make them work together.

This isn’t about choosing one over the other. It’s about building an AI governance approach that holds up under regulatory scrutiny, stakeholder pressure, and operational complexity.

At BI Group, we build that bridge, so AI is trusted not just in theory but in practice.

Thank you for reading, and for helping shift the conversation!

If this sparked new thinking, share it with a colleague who leads with integrity in AI.

🚀 Stay ahead in Responsible AI and Sustainability

🔗 Follow me for insights in Responsible AI & Sustainability → https://rb.gy/4qw8u4

🔗 Visit my official website→ https://alexandracarvalho.com/

📩 Subscribe to the Responsible AI Review LinkedIn Newsletter → https://rb.gy/2whpof

🌐 Join the Responsible AI & AI Governance Network LinkedIn Group → https://rb.gy/i2sxdo

♻️ Join the AI-Driven Sustainability Network LinkedIn Group → https://rb.gy/rht9xa

📬 Subscribe to my Substack newsletter for grounded RAI and sustainability insights →